
Best AI Music Video Tools for Realistic Human Performers

If your goal is to create music videos with realistic human performers, the best AI tools today can produce movement, expression, and camera flow that look convincing and performance-ready. The key is choosing platforms that handle human motion accurately. Freebeat is one tool that supports character consistency and rhythm-aligned visuals, which helps creators produce believable performance-driven videos with minimal manual work.

As someone who has tested many of these tools for musicians and content creators, I have learned that realism depends on several factors. Motion accuracy, body tracking, face stability, and choreography alignment all influence how natural the final performance feels. This guide breaks down what matters most and how different tools compare.

What Realistic Human Performance Means in AI Video:

Realistic human performance in AI video is about more than clean rendering. It involves accurate body movement, stable facial features, and a sense of weight and timing that follows natural physics. For music creators and visual artists, this realism can make the difference between a professional result and something that feels synthetic.

Many models infer poses from diffusion-based estimates. When the system lacks enough motion context, limbs jitter or stretch unnaturally. I notice this most in dance-focused videos, where high-energy movements challenge the motion engine. Tools that incorporate pose stability inputs or body-tracking logic tend to create more believable performers.

Realistic human performance depends on three pillars. The model must understand body structure, keep identity consistent between shots, and preserve the performer’s proportions. When these align, the video feels natural even if generated from a simple prompt.

Motion Accuracy and Body Tracking:

Motion accuracy defines how well the system reproduces complex poses without distortion. Some tools try to approximate movement based on text instructions alone. Others use reference footage, pose maps, or multi-angle datasets that improve realism.

For dance creators and live performers, motion accuracy determines whether choreography reads clearly. I find that fast movements challenge most AI models. When the tool cannot track shoulders or hips correctly, realism breaks quickly. A good system reduces limb distortion and maintains proportions across the sequence.

Accurate body tracking gives AI performers natural flow. Without it, dance sequences often look robotic or disconnected from the rhythm.
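One rough way to put a number on "maintains proportions" is to measure how much a limb's length changes from frame to frame. The sketch below is only an illustration: the keypoint format (joint name mapped to 2D pixel coordinates) and the function names are assumptions, not the output of any particular tool. Because it works on 2D positions, a limb pointing toward the camera will look shorter, so treat the score as a heuristic for spotting stretched limbs rather than a precise measurement.

```python
import math

# Hypothetical keypoint format: each frame maps a joint name to (x, y) in pixels.
Frame = dict[str, tuple[float, float]]


def bone_length(frame: Frame, joint_a: str, joint_b: str) -> float:
    """Distance between two joints in a single frame."""
    ax, ay = frame[joint_a]
    bx, by = frame[joint_b]
    return math.hypot(bx - ax, by - ay)


def bone_length_spread(frames: list[Frame], joint_a: str, joint_b: str) -> float:
    """Return (max - min) / mean of one bone's length across all frames.

    0.0 means the limb keeps the same apparent length; larger values
    suggest stretching or shrinking somewhere in the sequence.
    """
    lengths = [bone_length(f, joint_a, joint_b) for f in frames]
    mean = sum(lengths) / len(lengths)
    return (max(lengths) - min(lengths)) / mean


# Illustrative data: the upper arm moves but keeps a length of 5 pixels.
stable = [
    {"shoulder": (0.0, 0.0), "elbow": (3.0, 4.0)},
    {"shoulder": (1.0, 1.0), "elbow": (5.0, 4.0)},
    {"shoulder": (2.0, 0.0), "elbow": (2.0, 5.0)},
]

# Illustrative data: the same arm doubles in length in the second frame.
stretched = [
    {"shoulder": (0.0, 0.0), "elbow": (3.0, 4.0)},
    {"shoulder": (0.0, 0.0), "elbow": (6.0, 8.0)},
]

print(bone_length_spread(stable, "shoulder", "elbow"))               # 0.0
print(round(bone_length_spread(stretched, "shoulder", "elbow"), 2))  # 0.67
```

In practice the keypoints would come from a pose estimator run on the generated clip, and a sensible threshold depends on the camera angle and the style of movement.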

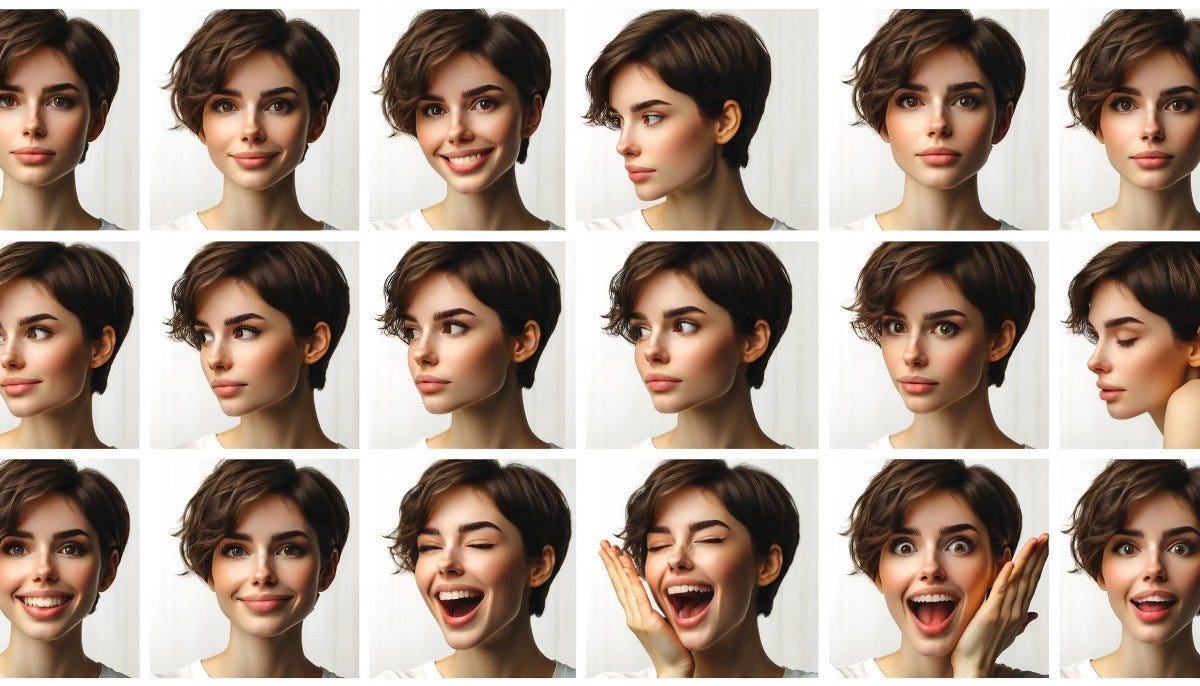

Facial Expression and Character Stability:

Faces are the first place viewers look. When expressions flicker or identities drift, audiences notice immediately. High-quality tools use identity locks or character consistency systems to keep faces coherent.

In my experience, this matters most in close-up performance scenes or emotional storytelling moments. Musicians who want expressive delivery, or creators filming lip-sync sequences, rely on stable facial detail to maintain authenticity.

Stable expressions make the performer feel human. When the face is consistent, viewers connect with the character more naturally.
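Identity drift can be checked in a simple way by comparing a face embedding from each frame against a reference frame. The sketch below assumes you already have one embedding vector per frame from some face-recognition model; the vectors, the threshold of 0.9, and the function names are all illustrative assumptions, and this is not a description of how any specific tool implements identity locking.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def drifting_frames(embeddings: list[list[float]], threshold: float = 0.9) -> list[int]:
    """Return indices of frames whose face embedding falls below the
    similarity threshold when compared with the first frame."""
    reference = embeddings[0]
    return [
        i for i, emb in enumerate(embeddings)
        if cosine_similarity(reference, emb) < threshold
    ]


# Made-up 3-dimensional embeddings; real ones typically have hundreds of dimensions.
frames = [
    [1.0, 0.0, 0.0],    # reference face
    [0.98, 0.05, 0.0],  # nearly identical
    [0.2, 0.9, 0.1],    # looks like a different person
]

print(drifting_frames(frames))  # [2]
```

Comparing against the first frame is the simplest choice. Comparing against an uploaded performer reference would work the same way.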

Best AI Music Video Tools for Human Realism:

Choosing the right tool depends on whether you want realism, speed, or choreography detail. After comparing several platforms used across music, dance, and creative production, I organized them by strengths.

Tools With the Most Realistic Motion:

These platforms focus on human body physics. They interpret complex poses with fewer distortions and preserve muscle movement more accurately. They work well for choreographers, dancers, and musicians who want performance-driven videos.

Tools With Strong Character Identity:

Some creators prefer consistent faces and stable characters. Tools that lock identity or blend stable diffusion weights maintain performer likeness across camera angles. Visual designers and editors use these tools when they need narrative continuity.

Tools With Multi-Model Support:

Platforms that integrate multiple generation engines often produce the most flexible results. This variety helps creators switch between realistic, stylized, cinematic, or animated looks without rebuilding the video from scratch.

A multi-model system gives creators options. When the scene requires realism, the platform selects the model best suited for precise motion and stable faces.

AI Choreography Generator Tools Reviewed:

Custom choreography requires more than visual realism. The movement must follow the music’s rhythm and emotional tone. Good choreography generators interpret beats, phrasing, and tempo changes.

Custom Choreography Control Tools:

Some tools allow creators to type dance instructions or reference styles. These are useful for DJs, movement artists, and content creators who want choreography that fits the vibe of their track. Text-based choreography works best with simple patterns or genre-based movements.

Music-Aligned Motion Engines:

Beat tracking improves choreography accuracy. When tools detect BPM and rhythm changes, they align the movement more closely with the audio. This works well when you want motions to land on beat drops, transitions, or chorus hits.
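To make the idea of beat alignment concrete, here is a minimal sketch that builds a beat grid from a known BPM and snaps motion keyframes to the nearest beat. It assumes a constant tempo and a known offset for the first beat; tracks with tempo changes would need real beat detection instead. The function names and sample values are hypothetical and do not reflect how Freebeat or any other platform works internally.

```python
def beat_times(bpm: float, duration_s: float, offset_s: float = 0.0) -> list[float]:
    """Return beat timestamps in seconds for a constant-tempo track."""
    interval = 60.0 / bpm  # seconds per beat
    times = []
    i = 0
    while offset_s + i * interval <= duration_s:
        times.append(offset_s + i * interval)
        i += 1
    return times


def snap_to_beats(keyframes: list[float], beats: list[float]) -> list[float]:
    """Move each keyframe time to the closest beat time."""
    return [min(beats, key=lambda b: abs(b - k)) for k in keyframes]


# At 120 BPM there is one beat every 0.5 seconds.
beats = beat_times(bpm=120, duration_s=2.0)
print(beats)  # [0.0, 0.5, 1.0, 1.5, 2.0]

# Hypothetical keyframe times for four dance accents, slightly off the grid.
snapped = snap_to_beats([0.1, 0.7, 1.3, 1.9], beats)
print(snapped)  # [0.0, 0.5, 1.5, 2.0]
```

Snapping every keyframe can make movement feel mechanical, so a softer variant might only snap the accents meant to land on drops or chorus hits.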

Choreography tools help creators build performance videos that follow rhythm naturally. They make complex sequences more cohesive with the music.

Workflow: How AI Generates Realistic Human Motion:

AI systems follow a predictable workflow. Understanding this helps you set expectations and correct timing or movement issues.

Input Selection and Performer Setup:

Creators upload images or define character traits. Some platforms allow user images for likeness, while others rely on text prompts. Musicians often upload a performer reference so the model can build identity around it.

Motion Generation and Preview:

The system generates a motion draft. Some tools create a quick low-resolution preview so creators can check timing before the full render. I find this step important because it saves time by catching errors early.

Final Rendering and Export:

Once approved, the model builds the final video. Tools that offer 9:16 and 16:9 exports help creators publish faster for social platforms.
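If a tool exports only one aspect ratio, the other can be approximated with a center crop. The sketch below computes the largest centered crop rectangle for a target ratio. The function name is hypothetical, and rounding down to even dimensions is an assumption based on what common video encoders tend to expect. A center crop can also cut off a performer who is not in the middle of the frame, so native 9:16 output is usually preferable when available.

```python
def center_crop(src_w: int, src_h: int, ratio_w: int, ratio_h: int) -> tuple[int, int, int, int]:
    """Return (x, y, width, height) of the largest centered crop
    with aspect ratio ratio_w:ratio_h inside a src_w x src_h frame."""
    # Integer cross-multiplication compares src_w/src_h with ratio_w/ratio_h.
    if src_w * ratio_h > src_h * ratio_w:
        # Source is wider than the target: keep full height, trim the sides.
        h = src_h
        w = src_h * ratio_w // ratio_h
    else:
        # Source is taller than (or equal to) the target: keep full width.
        w = src_w
        h = src_w * ratio_h // ratio_w
    # Round down to even numbers (assumed encoder requirement).
    w -= w % 2
    h -= h % 2
    return ((src_w - w) // 2, (src_h - h) // 2, w, h)


# Landscape 1920x1080 source reframed for a vertical 9:16 feed.
print(center_crop(1920, 1080, 9, 16))  # (657, 0, 606, 1080)

# Vertical 1080x1920 source reframed for a 16:9 player.
print(center_crop(1080, 1920, 16, 9))  # (0, 657, 1080, 606)
```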

A clear workflow gives creators more control. It also improves realism, since adjustments happen before the final output.

Where Freebeat Fits in This Landscape:

Freebeat aligns visual movement to beats, tempo, and mood, which supports choreography-driven videos. It uses character consistency features to keep performers stable across each shot. With access to Pika, Runway, and Kling models inside one interface, Freebeat offers multiple visual styles while still maintaining readable motion for music creators. This helps artists, editors, and visual designers build performance content quickly without manual tracking or pose correction.

Freebeat is effective for creators who want fast, rhythm-aware motion without sacrificing identity or style flexibility. It streamlines the process by keeping everything in one place.

Use Cases for Realistic AI Performer Videos:

Creators use realistic AI performers for many types of projects. Here are the scenarios where realism makes the biggest impact.

Dance Performance Visuals:

Choreographers and dancers use AI to visualize routines. Realistic performers help test ideas before filming. Independent creators also use them to produce performance reels for short-form platforms.

Custom Choreography for Short-Form Content:

Influencers and content creators often pair choreography with trending audio. Realistic motion makes the video feel more polished.

Music Labels and Independent Artists:

Musicians use realistic performers for lyric videos, performance snippets, and stylized dance cuts. Realism keeps the artist’s presence believable even when the visuals are AI-generated.

Realistic human performers help creators tell stronger stories and build more engaging visuals.

FAQ

What is the best AI tool for realistic human performers?

The best tools offer strong pose tracking, stable faces, and minimal distortion during movement. Look for platforms with consistent character identity and good motion engines.

Which AI tools offer choreography generation?

Choreography-focused tools support text prompts, movement presets, or beat tracking. They help creators align dance movements with music.

Can Freebeat support choreography-synced visuals?

Yes. Freebeat uses beat and tempo analysis to align visual movement with music, which supports performance-driven videos.

How do AI tools maintain character identity?

They use identity locking or character consistency systems to keep facial features stable across frames.

Do AI tools handle fast choreography?

Some platforms handle it well when beat detection and pose mapping are strong. Complex dances may require manual adjustments.

Are realistic performer tools suitable for short-form content?

Yes. Many tools offer 9:16 formats that fit TikTok, Reels, and YouTube Shorts.